Posture recognition with Kinect II

In this second article in the series on posture recognition with the Microsoft Kinect sensor, I present the generic classes and structs used to isolate the application from the different versions of the SDK. Which SDK version you need depends on your sensor model. The example code uses version 2.0, for the Xbox One sensor.

In this link you can find the first article in this series. In this other one you can download the source code of the KinectGestures solution, written in C# with Visual Studio 2015.

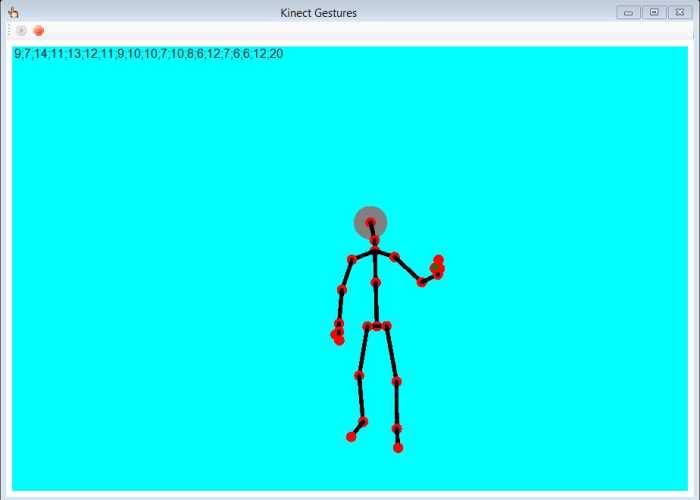

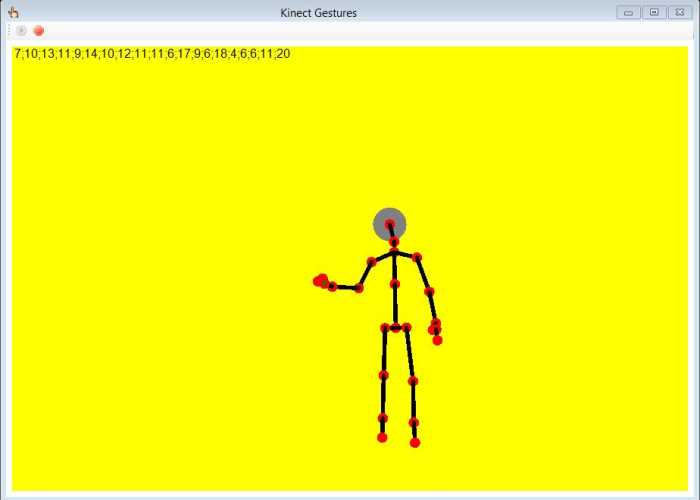

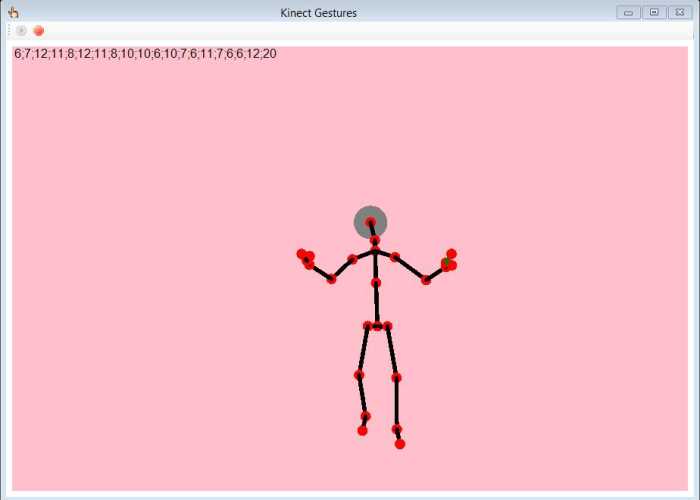

It is a very simple application with just a toolbar containing two buttons, one to start the sensor and one to stop it. The upper left corner shows the normalized body position as text, and the rest of the window shows the complete skeleton drawn in its current position.

The edges of the window are marked in red when the sensor detects that the body is leaving the frame in one of the four directions: left, right, up or down.

The bottom of the window changes color when either forearm reaches an angle close to 90 degrees: cyan for the right arm, yellow for the left arm, and pink for both arms at once.

The KinectInterface class library

The KinectInterface project is a library of generic classes that keeps the main application independent of the data types and classes of each Kinect SDK version. It also provides a generic three-dimensional vector struct to simplify body normalization, along with specialized classes that perform that normalization.

The Vector3D struct

It is a struct specialized in operating with three-dimensional vectors, mainly to obtain the angle a vector forms with any of the three coordinate axes and to rotate it around each of them.

The properties of this struct are simply the X, Y and Z coordinates of the vector, of type float.

The XYNormalizedPosition, ZYNormalizedPosition and XZNormalizedPosition methods project the vector onto one of the three coordinate planes and convert that projection into a unit vector, which gives us the sine and cosine of the angle it forms with the two coordinate axes of that plane. For example, XYNormalizedPosition projects the vector onto the plane formed by the X and Y axes. The X coordinate of the resulting vector is the cosine of the angle it forms with the X axis and the Y coordinate is the sine of that angle. Equivalently, Y is the cosine of the angle with the Y axis and X is its sine.
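The projection described above can be sketched as follows. This is only an illustrative approximation, not the library's source: the `Vector3DSketch` name and the handling of a zero-length projection are assumptions.

```csharp
using System;

// Hypothetical minimal vector; the real Vector3D in KinectInterface may differ.
public struct Vector3DSketch
{
    public float X, Y, Z;

    public Vector3DSketch(float x, float y, float z) { X = x; Y = y; Z = z; }

    // Projects the vector onto the XY plane (drops Z) and scales the
    // projection to unit length, so X becomes the cosine and Y the sine
    // of the angle between the projection and the X axis.
    public Vector3DSketch XYNormalizedPosition()
    {
        float length = (float)Math.Sqrt(X * X + Y * Y);
        if (length == 0f)
        {
            // Assumption: a vector parallel to Z has no defined angle in XY.
            return new Vector3DSketch(0f, 0f, 0f);
        }
        return new Vector3DSketch(X / length, Y / length, 0f);
    }
}
```

For instance, the vector (3, 4, 12) has a projection of length 5 on the XY plane, so the result is approximately (0.6, 0.8, 0).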

The InvertXY, InvertZY and InvertXZ methods negate one coordinate of the vector to obtain its mirror image with respect to one of the coordinate planes. For example, InvertXY negates the Z coordinate.
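A minimal sketch of the mirroring follows. Only the behaviour of InvertXY is stated above. The other two methods are assumed, by analogy, to negate the coordinate perpendicular to the named plane, and `Vector3DSketch` is a hypothetical stand-in for the real struct.

```csharp
// Hypothetical stand-in for Vector3D; only the mirroring is shown.
public struct Vector3DSketch
{
    public float X, Y, Z;

    public Vector3DSketch(float x, float y, float z) { X = x; Y = y; Z = z; }

    // Mirror with respect to the XY plane: negate Z (as described in the text).
    public Vector3DSketch InvertXY() { return new Vector3DSketch(X, Y, -Z); }

    // Assumption: mirror with respect to the ZY plane negates X.
    public Vector3DSketch InvertZY() { return new Vector3DSketch(-X, Y, Z); }

    // Assumption: mirror with respect to the XZ plane negates Y.
    public Vector3DSketch InvertXZ() { return new Vector3DSketch(X, -Y, Z); }
}
```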

The RotateXCC, RotateYCC and RotateZCC methods rotate the vector counterclockwise by a given angle around one of the three coordinate axes. The arguments are the cosine and the sine of that angle. For example, RotateXCC rotates the vector using the X axis as the rotation axis. To rotate clockwise there are three other methods: RotateXTC, RotateYTC and RotateZTC.
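As an illustration, a rotation around the X axis that takes the cosine and sine directly could look like the sketch below. It assumes right-handed axes with "counterclockwise" seen from the positive end of the X axis. The actual convention and parameter order in the library may differ, and `Vector3DSketch` is a hypothetical name.

```csharp
// Hypothetical stand-in for Vector3D; only rotation around X is shown.
public struct Vector3DSketch
{
    public float X, Y, Z;

    public Vector3DSketch(float x, float y, float z) { X = x; Y = y; Z = z; }

    // Counterclockwise rotation around the X axis (assumed convention):
    // X is unchanged, Y and Z are mixed by the standard 2D rotation.
    public Vector3DSketch RotateXCC(float cos, float sin)
    {
        return new Vector3DSketch(X, Y * cos - Z * sin, Y * sin + Z * cos);
    }

    // Clockwise rotation is the same formula with the sine negated.
    public Vector3DSketch RotateXTC(float cos, float sin)
    {
        return RotateXCC(cos, -sin);
    }
}
```

Passing the cosine and sine instead of the angle means the values produced by the normalized projection methods can be used as they are, without any trigonometric calls.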

Finally, the - operator returns the difference between two vectors.
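A subtraction operator of this kind is typically used to turn two joint positions into the vector that connects them. The sketch below is illustrative only: `Vector3DSketch` and the sample coordinates are made up.

```csharp
// Hypothetical stand-in for Vector3D; only subtraction is shown.
public struct Vector3DSketch
{
    public float X, Y, Z;

    public Vector3DSketch(float x, float y, float z) { X = x; Y = y; Z = z; }

    // Component-wise difference a - b.
    public static Vector3DSketch operator -(Vector3DSketch a, Vector3DSketch b)
    {
        return new Vector3DSketch(a.X - b.X, a.Y - b.Y, a.Z - b.Z);
    }
}
```

For example, with an elbow at (0.2, 0.1, 1.5) and a wrist at (0.2, 0.4, 1.5), `wrist - elbow` gives a forearm vector of roughly (0, 0.3, 0), pointing straight up.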

Enums and auxiliary data structs

The Joint enum defines all the different joints of the body. HandSt is an enum for the different states of the hands. BodyPart is another enum with different composite parts of the body, such as the forearm, the full arm or the full arm plus the hand. This enum is used to obtain the points of one of these parts in order to perform its normalization.

BodyPoint is a struct used to store the data of one of the joints of the body. The Accuracy property represents the precision with which the sensor has determined the joint position: -1 if it has not been determined, 0 if the position is inferred, or 1 if it has been determined accurately. The Vector property, of type Vector3D, holds the three-dimensional coordinates of the point, and the Point property, of type PointF, holds the coordinates projected in two dimensions so that the point can be drawn on the screen.
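The three Accuracy values can be made easier to read with named constants and a couple of helper properties. The sketch below is an assumption about how such a struct might be organized: the constant names, the helpers and the plain float fields (standing in for the Vector and Point properties) are all hypothetical.

```csharp
// Hypothetical, simplified version of BodyPoint.
public struct BodyPointSketch
{
    // Named versions of the three accuracy values described in the text.
    public const int NotDetermined = -1;
    public const int Inferred = 0;
    public const int Tracked = 1;

    public int Accuracy;

    // Stand-ins for the Vector (3D) and Point (2D) properties.
    public float X, Y, Z;
    public float ScreenX, ScreenY;

    // True when the sensor reported any position at all.
    public bool HasPosition { get { return Accuracy >= Inferred; } }

    // True only when the position was measured rather than inferred.
    public bool IsReliable { get { return Accuracy == Tracked; } }
}
```

A drawing routine could, for example, skip points where `HasPosition` is false and use a different color for points that are only inferred.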

Finally, the ClippedEdges struct indicates whether the body is leaving the sensor frame on the left, right, top or bottom.
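One way to keep such a struct independent of the SDK is to fill four booleans from whatever edge information the sensor reports. The sketch below assumes the SDK exposes a set of bit flags. `EdgeFlagsSketch`, its values and the field names are hypothetical stand-ins, not the library's actual members.

```csharp
using System;

// Hypothetical flags enum standing in for the SDK-specific edge information.
[Flags]
public enum EdgeFlagsSketch { None = 0, Right = 1, Left = 2, Top = 4, Bottom = 8 }

// Hypothetical, SDK-independent version of ClippedEdges.
public struct ClippedEdgesSketch
{
    public bool Left, Right, Top, Bottom;

    // True when the body is leaving the frame on at least one side.
    public bool Any { get { return Left || Right || Top || Bottom; } }

    // Translates the SDK-style flags into plain booleans.
    public static ClippedEdgesSketch FromFlags(EdgeFlagsSketch flags)
    {
        return new ClippedEdgesSketch
        {
            Left = (flags & EdgeFlagsSketch.Left) != 0,
            Right = (flags & EdgeFlagsSketch.Right) != 0,
            Top = (flags & EdgeFlagsSketch.Top) != 0,
            Bottom = (flags & EdgeFlagsSketch.Bottom) != 0
        };
    }
}
```

The application can then decide which window edges to paint red by reading the four booleans, without referencing any SDK type.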

The BodyVector class

The BodyVector class represents an array of BodyPoint structs with all the joints of the body. It exposes the state of the hands in the LeftHand and RightHand properties, the location of the body relative to the edges of the sensor frame in the Clip property, the time at which the body position was captured in the Time property, and the total size of the sensor frame in the Size property, which helps us scale the skeleton drawing.

The GetBodyPart method is used to obtain an array of BodyPoint structs with the points of a certain part of the body.
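To make the idea concrete, here is a reduced sketch of how a body part could be resolved to its points. All type names, the joint list and the mapping from parts to joints are assumptions made for illustration. The real enums cover the whole body and the real class carries more data.

```csharp
using System.Collections.Generic;

// Hypothetical, reduced versions of the Joint and BodyPart enums.
public enum JointSketch { ShoulderRight, ElbowRight, WristRight, HandRight }
public enum BodyPartSketch { RightForearm, RightArm, RightArmAndHand }

// Hypothetical, simplified BodyPoint.
public struct BodyPointSketch
{
    public int Accuracy;   // -1 not determined, 0 inferred, 1 accurate
    public float X, Y, Z;  // stands in for the Vector3D coordinates
}

public class BodyVectorSketch
{
    // One point per joint, indexed by the integer value of JointSketch.
    private readonly BodyPointSketch[] _points;

    // Assumed mapping from each composite part to its joints, in order.
    private static readonly Dictionary<BodyPartSketch, JointSketch[]> Parts =
        new Dictionary<BodyPartSketch, JointSketch[]>
        {
            { BodyPartSketch.RightForearm,
                new[] { JointSketch.ElbowRight, JointSketch.WristRight } },
            { BodyPartSketch.RightArm,
                new[] { JointSketch.ShoulderRight, JointSketch.ElbowRight,
                        JointSketch.WristRight } },
            { BodyPartSketch.RightArmAndHand,
                new[] { JointSketch.ShoulderRight, JointSketch.ElbowRight,
                        JointSketch.WristRight, JointSketch.HandRight } }
        };

    public BodyVectorSketch(BodyPointSketch[] points) { _points = points; }

    // Returns a copy of the points that make up the requested part.
    public BodyPointSketch[] GetBodyPart(BodyPartSketch part)
    {
        JointSketch[] joints = Parts[part];
        var result = new BodyPointSketch[joints.Length];
        for (int i = 0; i < joints.Length; i++)
        {
            result[i] = _points[(int)joints[i]];
        }
        return result;
    }
}
```

Because the result is a separate array of structs, a normalization step can rotate or translate those points without altering the original body data.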

In the next article I will show how access to the Kinect sensor is encapsulated.